I kind of thought of the UI as the product and then machine learning came along and the machine learning was there to sort of help the UI, and that shifted completely. Now AI is the product and the UI is there to help the AI to capture better signal.

— Gustav Söderström, Co-President, CPO & CTO at Spotify, Source

It’s very clear that some version of the future includes a world where software actions begin to migrate from humans to AI Agents. How this manifests itself is likely a spectrum with two very different angles of approach that have implications on how you build a company moving forward.

Each approach has its own merits, and both are perhaps rate-limited by technology development and human adoption. This creates complexity for startups aiming to build in a world with any level of agent penetration, and forces them to make implicit bets on how this future plays out and at what pace.

Scenario 1: One UI to Rule Them All

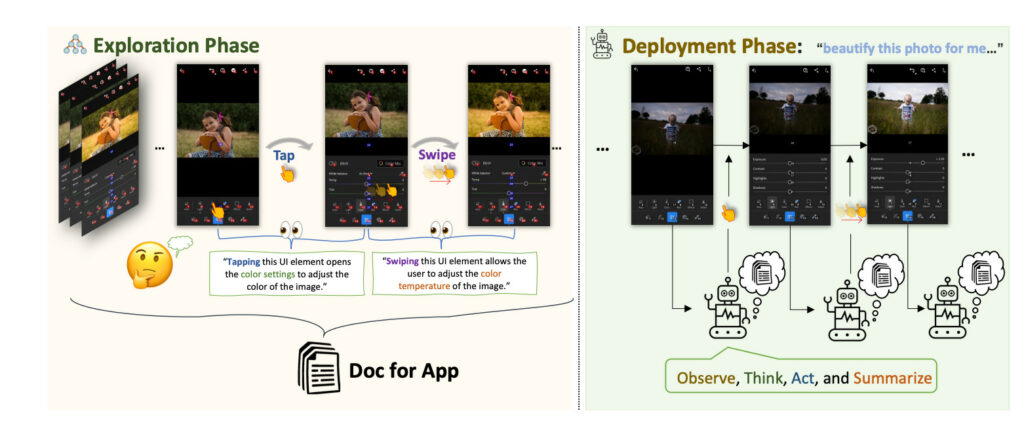

The first, and perhaps most obvious, scenario is one in which agents take a simplistic, classical, UI-driven, multimodal approach. Startups will take the existing interfaces that humans use to navigate apps and build an understanding of them into agents, which then accomplish the tasks that the startups believe should be automated and handed off from humans.

This approach is enabled by things like GPT-4V (though some have seen varying success), Gemini Pro Vision (via tools like Self-Operating Computer) and a slew of open-source models + closed-source models to come. Most recently, a version of this was shown in AppAgent by Tencent which used some form of autonomous exploration and imitation learning to control a mobile phone.
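To make the shape of this approach concrete, here is a minimal sketch of a UI-driven agent loop: observe the current screen, pick something to tap, repeat. Everything in it is an assumption for illustration. The toy `SCREENS` map, the `Element` type, and the `choose_element` stub are hypothetical, and the stub stands in for a multimodal model rather than calling any of the tools named here.

```python
from dataclasses import dataclass


@dataclass
class Element:
    label: str   # visible text, as a human would read it on screen
    target: str  # name of the screen reached by tapping this element


# Hypothetical toy app: each screen lists the elements a human could tap.
SCREENS = {
    "home": [Element("Settings", "settings"), Element("Expenses", "expenses")],
    "expenses": [Element("New report", "new_report"), Element("Back", "home")],
    "new_report": [Element("Submit", "done")],
    "settings": [Element("Back", "home")],
}


def choose_element(screen_elements, plan):
    """Stand-in for a multimodal model: return the first visible element
    whose label appears in the plan, checking plan entries in order."""
    for wanted in plan:
        for element in screen_elements:
            if element.label == wanted:
                return element
    return None


def run_ui_agent(start, goal, plan, max_steps=10):
    """Navigate the app the way a human would, one tap at a time."""
    screen, taps = start, []
    for _ in range(max_steps):
        if screen == goal:
            break
        element = choose_element(SCREENS[screen], plan)
        if element is None:
            break  # nothing on screen matches the plan; give up
        taps.append(element.label)
        screen = element.target
    return screen, taps


final_screen, taps = run_ui_agent(
    "home", "done", ["Expenses", "New report", "Submit"]
)
```

Even in this toy version the agent needs three taps through screens designed for human eyes to complete one logical task, and every tap is a chance for the model to choose wrongly.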

This approach is predicated on a shorter-term moat and slower rate of change.

Diversity of ability and/or skill level (the percentage of correct actions and, in theory, the complexity of actions that can be completed) is what will win here, and there are likely usage flywheels that can drive and expand competitive advantages based on the learning methods.

The main need to believe for Scenario 1 to win out is that the time between humans using their phones first-hand today and humans giving up a large number of actions to AI agents will be longer than many appreciate, or merely that AI adoption will be highly fragmented across use cases.

Put another way, will humans always want to do things like scroll Instagram, but perhaps never want to open TurboTax/Docusign/Concur/Foursquare/etc. again?

This will likely bring a very psychological approach to company building in software (something well known in consumer but less used in enterprise), as companies will have to look at a spectrum of tasks and figure out whether only dopamine-driven tasks will still be done by humans in software, or whether some areas will keep a human in the loop not because of utility but because of social/emotional dynamics.

This creates an existential threat for companies that build software they believe is for humans, only for it to get steamrolled by software made for AIs.

Scenario 1 isn’t too dissimilar from the argument for humanoid robots, which is that the world is already built for humans and thus we should optimize for the human modality at a mechanical level.

In theory, this sounds nice. However, the overall cost of building a humanoid robot, and all of the computation it needs, starts to look more daunting than many appreciate. In reality, when we look at the history of highly proficient “robots”, we end up with purpose-built, non-human modalities that repeatedly accomplish a task at very high efficiency and skill level: a dishwasher, a washing machine, a coffee maker, etc. (Footnote 1: I found this demo that went viral this weekend somewhat ironic, as the robot test was of a robot using a Keurig (a literal coffee robot) to make coffee.)

Enter Scenario 2.

Scenario 2: Agent-Specific UIs

Agent-specific UIs rest on the premise that agents shouldn’t interact with software (or “apps”) in the same way humans do because it is woefully inefficient and will rate-limit development progress on the app side at a time when software is entering a new AI-driven paradigm. (Footnote 2: Perhaps the mark of a true e/acc person should be not using human UIs.)
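As a rough illustration of what an agent-specific surface could look like, the sketch below exposes a task as a single typed action with a machine-readable description, rather than as screens to navigate. The `AgentEndpoint` class, the `submit_expense` action, and the `ExpenseReport` fields are all hypothetical names invented for this example; no real product's API is implied.

```python
from dataclasses import dataclass


@dataclass
class ExpenseReport:
    amount_cents: int
    merchant: str


class AgentEndpoint:
    """Hypothetical agent-facing surface: typed actions, no screens."""

    def __init__(self):
        self.submitted = []

    def describe(self):
        # Capability listing an agent could read instead of parsing pixels.
        return {"submit_expense": {"amount_cents": "int", "merchant": "str"}}

    def call(self, action, **params):
        if action != "submit_expense":
            raise ValueError(f"unknown action: {action}")
        # Unexpected or missing parameters raise TypeError here.
        report = ExpenseReport(**params)
        self.submitted.append(report)
        return {"status": "ok", "count": len(self.submitted)}


endpoint = AgentEndpoint()
result = endpoint.call("submit_expense", amount_cents=1250, merchant="Cafe")
```

The whole task is one call with explicit parameters. There is no layout to interpret, and the developer can add a new action without designing a screen for it.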

Shipping code without needing to define a perfect human UI/UX, along with the dark patterns and cohesiveness that surround these UIs, results in a far faster iteration cycle while potentially increasing ROI. In addition, this paradigm allows startups to ship more features faster, accruing value to them ahead of shipping what many would deem a polished, unified “product”.

Of course, new dark patterns could emerge that enable developers to capture and then encourage Agent behaviors via RL, in the same way we utilize humans with RLHF.

When looking at Scenario 2’s style of development you have a potential compounding advantage in the excellence of the org in either:

a) Integrating with public-facing APIs or back-ends in whatever form they take, and iterating on the agents themselves (do they have specific understanding of a use case, do they carry certain personality traits, can they truly remove humans-in-the-loop for a given task, etc.)

b) Being able to sign partnerships to create custom access for a company’s agents by exposing non-public back-ends to a subset of “agent development” companies like OpenAI, Google, etc.

The path of a) could likely benefit startups, or at least create a level playing field between upstarts and incumbents.

The path of b) is where large AI companies and incumbents may be able to capture outsized value and build a significant moat due to their ability to have larger security and data management infrastructure, which then will create a very tight loop of BD/partnerships -> data gathering -> performance/customer utility.

Apple (and to a lesser extent Android OEMs like Samsung) of course sits at an interesting point here with its control of the proverbial metal, and will likely push further into using local compute to drive trusted autonomous behavior on-device.

With all of this said, the research path for Scenario 2 companies today would likely have to diverge. It’s very possible that agent-driven research will not overlap much with broader frontier LLM research when it comes to squeezing out inference cost efficiency, latency, and perhaps certain types of actions for a given vertically-focused product. (Footnote 3: Theoretically, this is the premise Adept was founded under with their ACT models.) Over time this will change as new frameworks are built and open-sourced or agent-behavior APIs are released.

It is my belief that the future lies in Agent-specific UIs (Scenario 2). This paradigm is a far more intellectually interesting open space to explore for startups and most software companies.

Having seen what AI companies can do when they no longer need to worry about unified products versus singular tools, I think the rate of progress and malleability that building agent-first applications allows far outweighs the short-term complexity and adoption rate one could experience.

When looking at this problem set from a high level, again, the need to believe centers on technology development and human adoption of agents.

Within that lies a new fork in the road for startups, and perhaps all companies. They must make some form of choice between back-end and front-end optimization for the future, and may need to build “in the dark” without much user feedback, as they expect to change human behavior in an unexpected way.

AI does incredible things mimicking humans already, but perhaps tomorrow the world we live in is one where the question isn’t about adapting to AI, but how AI reinvents our adaptation. Or said another way, not using the UI to drive performance of AI, but instead using AI to drive the performance of UI.
