Typically in open-source software, the business model for venture-backed companies has been to release a public good and build managed services on top of it. This has worked with low capex and a set of contributors working for long periods of time. These companies and individuals maintain the core repo and build a community, both of which build structural resilience amongst a group of core developers, and the project becomes some form of a standard. [Footnote 1: We can debate the semantics of this but let’s not.]

With open-source AI (OSAI) labs, you are running super high capex in order to build a public good (a model) for some period of time, which you hope the community then iterates upon. While certain labs create small communities, very few have broken out in language, with developers instead coalescing around hubs like the r/LocalLLaMA subreddit and Hugging Face. However, as models become more modular and hot-swappable at the inference layer, it’s likely that a given model (each of which we can assume costs at least $100k-$1M of capex) will become stale and be swapped out.

While this dynamic can and will likely push forward the overall rate of progress within AI, it’s unclear if it’s a dominant economic strategy. And depending on how horizontal your model is, these dollar figures can creep higher and result in 7-8 figures to “maintain” your place in the OSAI ecosystem if playing at the model layer.

This raises obvious questions as to how a variety of labs predicated on open-source last long-term, unless the goal is to do radical things like start open-source and then move closed-source, or build new forms of app stores where a series of models are commercialized and the OSAI lab becomes a take-rate driven business at the API layer. Both of these feel like a losing battle vs. OpenAI, Anthropic, Meta, Apple, Google, MSFT etc., especially as Meta allows free commercial usage of Llama 2 up until ~700 million monthly active users. [Footnote 2: You could also go the path of building novel hardware around your open-source models. I like this approach and there’s currently a battle between Rewind and Tab on this, both of which I worry will end up hitting some limitations.]

The obvious other answer is of course to build tools around your models and own the infra/middleware layer, and/or to build custom models for enterprises or governments. This is compelling as we see more “novel” approaches to performance pop up that can increase inference quality and speed, including architectures like Mixture of Experts or deployment choices like Agents, each of which has basically no discernible and approachable infrastructure at scale today. [Footnote 3: There have been recent efforts to try this, with OpenMoE amongst others, which we are bullish on.]

That said, the enterprise approach is one that a variety of proprietary, closed-source model providers will go after, especially as the Frontier Model companies start to see divergence in success. One can easily imagine a scenario where, if OpenAI and/or Anthropic steamroll everyone on horizontal models, the next pitch for second-tier “Frontier” language model companies will be the custom-trained enterprise path in order to grow into multi-billion dollar valuations.

I’m net incredibly bullish on the open-source side of AI, and my answer to many of these questions comes down to me perpetually screaming that this becomes a question of who can build the best process to advance proprietary model performance, the infrastructure to be malleable on the model side, and the best product (with the most opinionated definition of “best”) to utilize both proprietary and fine-tuned open-source models over time.

In this world, I’m not sure the labs doing open-source model development win at scale, but maybe I’ll be wrong, so please challenge me on this.

Brand Moats

This leads me to my second view on OSAI models and broadly all AI labs and companies today, which is the increasing importance of brand moats.

While not a novel paradigm in open-source, developers have historically been far less fleeting in how they utilize fairly solidified OSS layers of the stack because of some form of network effect that spins up. This network effect is often a slow build that creates a superior product which generates minimal revenue on its own, and thus becomes a public good that faces moderate but not intense competition for some short- to mid-term period. [Footnote 4: Again, there’s nuance here but just let me have this, I’m writing this in a hotel lobby at a conference while I recharge my social battery.]

If we try to parse how this could play out in OSAI, crypto protocol development of all places may have some important lessons for companies to learn from.

What AI Labs can learn from Crypto Protocols

Crypto has faced quite different dynamics to traditional OSS, with protocols facing consistent forks as the crypto industry tries to financialize public goods and open-source value creation. This leads to a rolling ball of money that is incentivized to rotate between projects and usage that is mercenary at best.

However, as we’ve seen over the past few years, this hardcore financial incentivization rarely pulls developers or users for a long period of time. This is because protocols that are longer-standing or truly novel have done a great job at generating brand moats in a fairly recursive and tight-knit space. Sometimes this is due to superior technology, but other times it is merely elegance of design, Lindy effect, community, and a variety of other intangible factors.

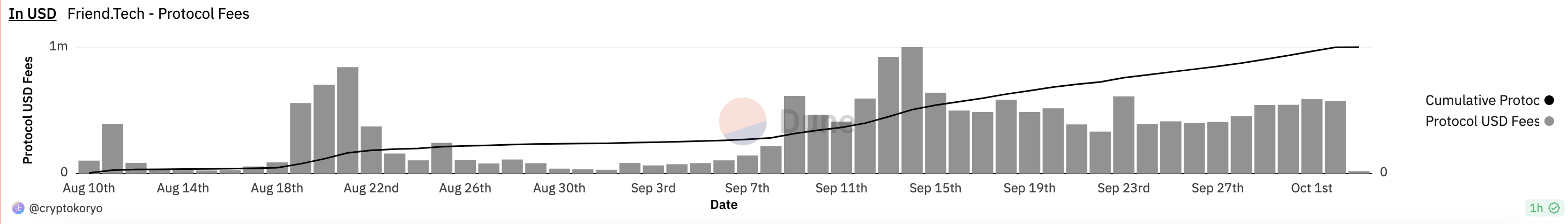

Most recently, a great example of this is Friend.tech, a project that has done over $15M in revenue in the past ~2 months. As Friend.tech has faced a variety of mercenaries forking its protocol, it has been largely unaffected due to a brand moat (and some product development pace) that is unassailable, despite the core underpinning of the product being the simple mechanism of speculating on access to group chats via Keys.

Put more simply, crypto participants just like and trust Racer, the co-creator of the application, and respect their native understanding of the community and the brand cachet that comes with having now shipped multiple consumer crypto products that capture the zeitgeist of the industry. The dollars and users thus won’t flow as freely just because of incremental progress.

AI Brand Moats

At this stage of the OSAI cycle, the dynamics for open-source models are far more closely related to those that the crypto industry deals with than to those of more traditional OSS.

In a prior post I wrote:

What happens when you add personality or an opinionated aesthetic to a given AI tool is you create a community that feels allegiance to your product in a deeper way than just ROI, perhaps allowing for products to compete with larger, more general purpose models, or just better-funded organizations.

This community can be an immensely beneficial flywheel and perhaps a more important moat than we appreciated, as we’ve seen with the thousands of people contributing to the Midjourney and Runway communities over time, pulling out new experiences with prompts and showing everyone what is possible. This is one of the core things that compounds within these startups outside of technical knowledge, data, and compute.

As we see OSAI models pop up daily that edge up performance or utility via things like longer context windows, better performance at fewer parameters, uncensored outputs, and much more, the open-source movement marches towards the minimum viable ROI to be built upon, narrowing the gap with the closed players. [Footnote 5: There is even a spectrum here of open-sourcing the training data in addition to the weights, which has implications as well.]

As individuals, groups, or companies begin to develop a suite of open-source models which can be used for a variety of use-cases, it’s likely that brand moats will become more important than most building in the space appreciate.

It’s important to note that brand moats are not built quickly, but instead are a result of many intentional steps by companies and the people that comprise them.

While many software companies have done great jobs building brand moats over time, the opportunity (and threat) in building a brand moat in AI is also more multi-faceted than in prior paradigms, as entire collectives of professionals wage war against the technology and everyone from consumers to enterprises looks for figureheads to give them answers on everything from socio-economic shifts to timelines of breakthroughs and more.

In AI we’ve seen firms like Hugging Face use their Transformers library to generate a network effect that then lifted up their brand as the beacon for OSAI (originally starting as the core place for those interested in NLP). HF has become the de facto home for open-source, which has allowed them to accumulate material talent and capital at a pace that some would say runs ahead of traditional metrics.

We’ve also seen the synergy between network effects and brand moats being framed as directly correlated to performance increases across the fundraising pitches for all of the large AI Labs. Most of these financing decks claim that model size and data scale, paired with RLHF, will create breakaway model performance that cannot be surpassed.

Lastly, we’ve seen purely personal aesthetic choices start to enter the fray, including labs like Mistral just releasing their latest uncensored models on BitTorrent.

Personifying a Movement as a Brand Moat

Upon each major technological shift, the broader public increasingly looks to personify this shift. Elon with Space, Gates with Climate, SBF with Crypto, and many others. It has now become clear that controlling the narrative is important if you want your company to break through the noise of a novel category and accumulate as much attention and capital as possible.

Nobody has perhaps understood the value of these brand-building dynamics and embodied this tactical approach better than Sam Altman, who I would argue is running perhaps the largest-scale tech-oriented PR campaign in history.

It’s likely that personifying the entire movement is a window that has closed, though others such as Mustafa Suleyman at Inflection are still attempting to do so by doing something I haven’t ever seen a pre-PMF startup founder do: publishing a book. [Footnote 6: Ryan Hoover at Product Hunt oddly is the closest comp here, but I feel like he did this as PH was rising.]

I largely view these broad-based “AI Thought Leader” attempts as futile efforts at this point, and instead believe that companies should aim to differentiate and hone the core message surrounding their company instead of boiling the entire AI ocean. [Footnote 7: This doesn’t mean that Sam/OpenAI has “won” AI, just that it’s not really feasible or worthwhile for a startup to attempt to capture that zeitgeist in this moment when OpenAI has a clear lead.]

Some individuals and/or groups have done a good job of this by carving out a specific niche of a community within AI. I think of people like TheBloke, who works on quantization of models, as well as Jon Durbin, who has pushed forward synthetic-data-tuned LLMs via Airoboros.

In our portfolio we’ve seen this done incredibly well by multiple companies, including Runway, which has empowered and elevated the stories of creatives as it builds the most advanced tools ever for video (and creativity broadly), as well as Wayve, which has pushed the bounds of safety and explainability in AVs as well as Embodied Intelligence with bleeding-edge research and public demos.

The brand moats that companies build will likely only increase in importance as capital markets continue to kingmake a range of startups in a given space and talent begins to more heavily consolidate amidst fast failures from the AI boom cycle of 2022-2024. The leading companies will be large beneficiaries as the Talent → Performance → Brand → Capital flywheel spins faster and faster.

While this idea feels a bit fuzzy, there are many other approaches and vectors in which we believe people will be able to build brand moats within AI (and other emerging categories) over the next few years that aren’t covered here. It’s clear some strategies will be abandoned or dialed down based on where we are in a given technological cycle (remember how much Anthropic oriented towards owning safety and alignment early on), while others will be pushed on more aggressively and at a scale we haven’t seen (I’m waiting for an AI Lab to politicize these models and mainstream the claim that censored models are anti-free speech [Footnote 8: Especially as we’re seeing their performance outpace many aligned OSAI models]).

Conclusion?

Across each of the topics in this post, I continue to go back to the view that building in AI is a perpetually changing dark forest. A dark forest where companies must progress across multiple novel variables surrounding long-term value capture in perhaps the most complex industry we’ve had startups building in at scale in some time.

While lots of capital will push towards well-pedigreed OSAI labs building models as public goods, the enduring value capture continues to feel tenuous at best and could end in a familiar ground of largely duopolistic market dynamics. [Footnote 9: If we look at mobile as a parallel, it effectively is one open-source layer (Android) and one closed-source (iOS).] The genuine economic power of OSAI, while formidable in propelling community evolution, may end up only in areas where labs navigate untouched or unpopular domains, embarking on areas anchored by non-consensus infrastructures or unprecedented model frameworks/approaches.

At the same time, as the entire space gets pushed and models continue to evolve, it’s likely that where companies opt to build and maintain moats continues to shift. On one hand, many are dubious of most AI moats and believe it will be a race to the bottom and destructive for all startups. However, perhaps a more nuanced take is that AI will be mostly about long-term survival.

In a world in which users’ dollars will bounce between applications, API providers, and a variety of different types of companies, perhaps the durability of revenue won’t come from continual incremental improvement, but instead from enduring narratives that are able to stand the tests of technological time and the deaths by a thousand cuts via an overcrowded ecosystem.

As we look at the directional line of progress, it’s not clear there’s a perfect solution to any of these questions, but it’s clear there are under-explored paths and new dynamics that we all must continue innovating on today, to survive till tomorrow.
